[2020-07-12] Challenging Sequential Bitstream Processing via Principled Bitwise Speculation

Published:

A list of all the posts and pages found on the site. For you robots out there, an XML version is available for digesting as well.



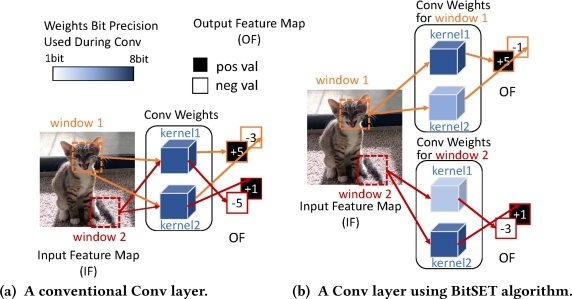

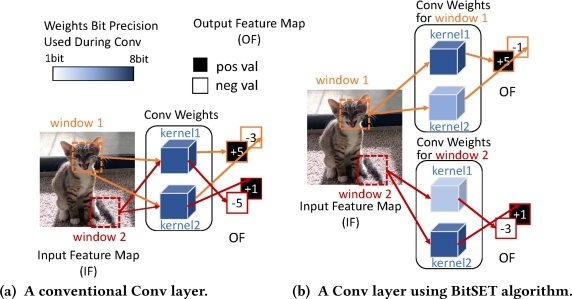

A software-hardware co-design to accelerate DNNs

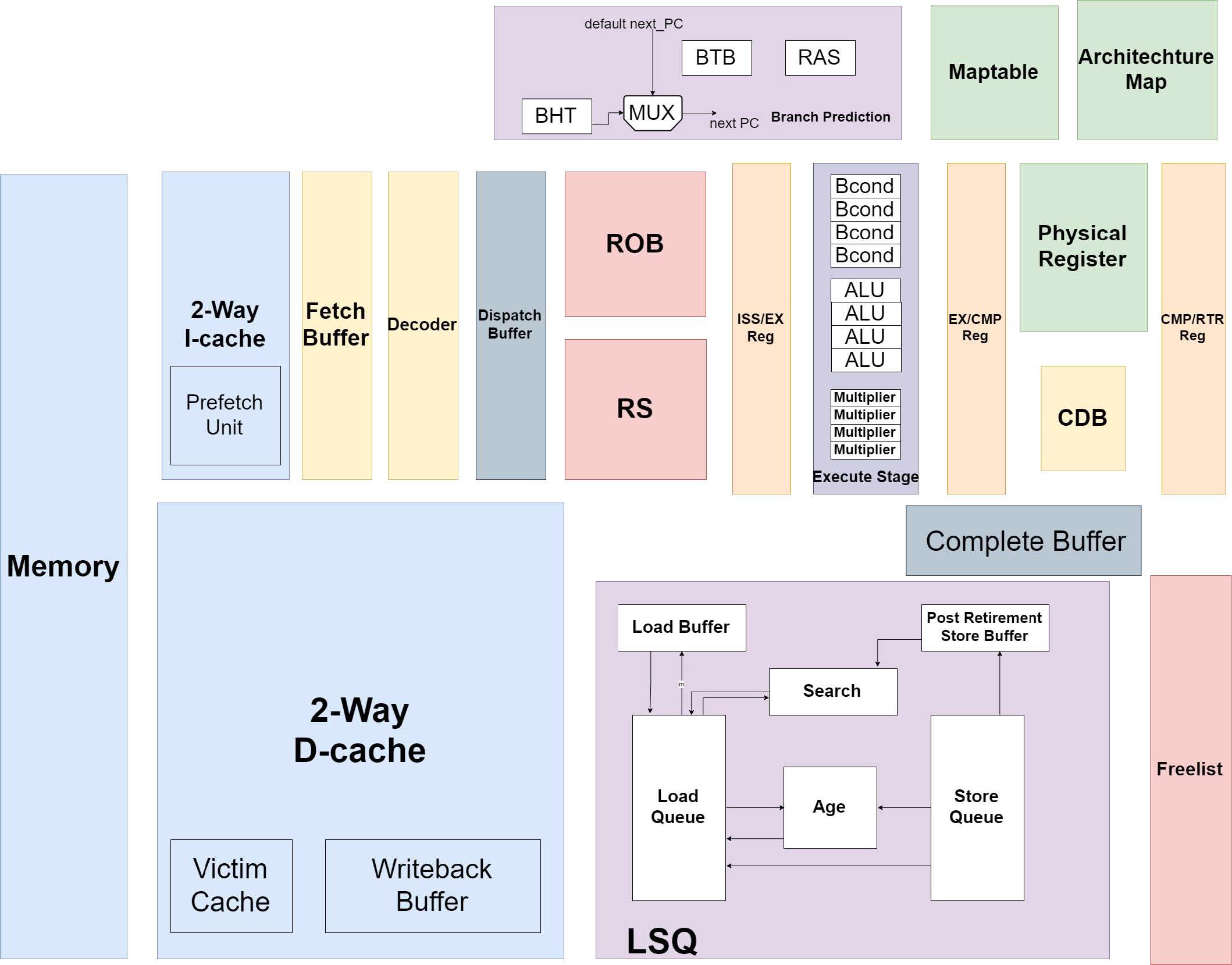

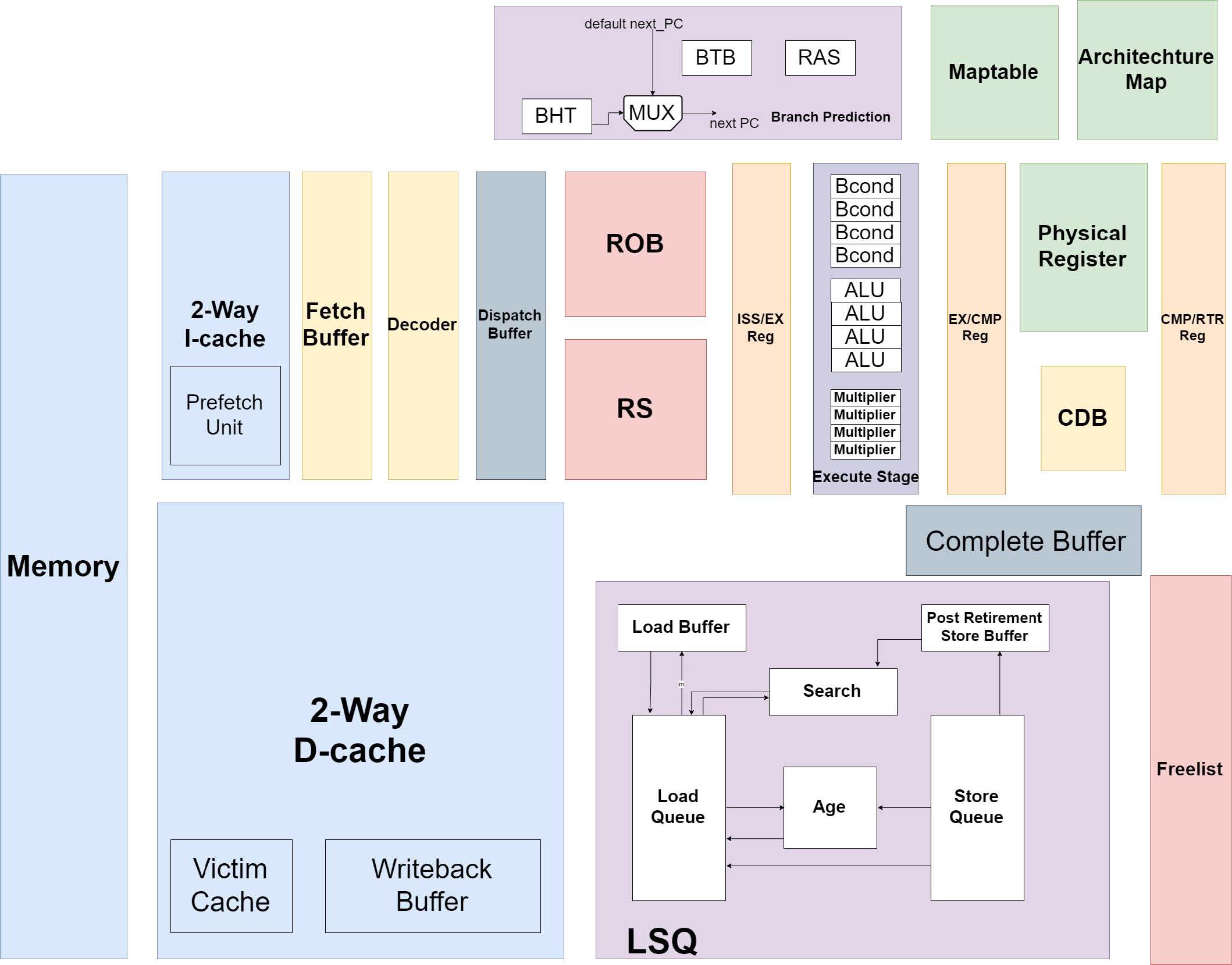

RISC-V 4-way Superscalar R10K OoO Processor

About me

This is a page not in the main menu

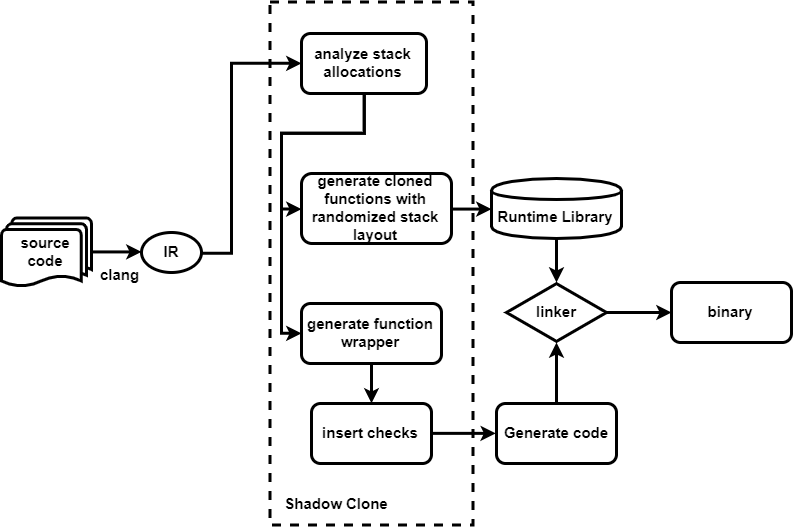

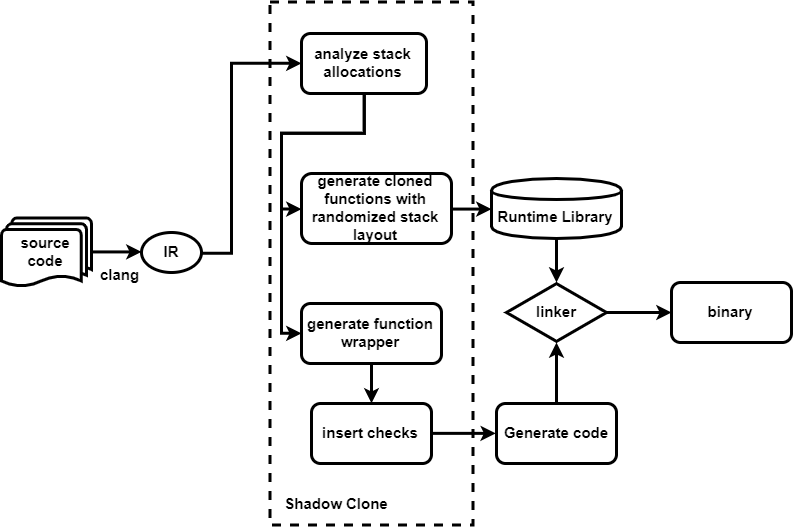

Thwarting and Detecting DOP Attacks with Stack Layout Randomization and Canary

Published:

This reading blog covers three papers in Session 3A: Machine Learning 1 of ISCA 2021.

This accelerator supports mixed precisions for both training and inference: 16- and 8-bit floating point and 4- and 2-bit fixed point. It improves both performance (TOPS) and energy efficiency (TOPS/W) at ultra-low precision. In my opinion, this work contributes more on the engineering (architecture) side.

Published:

I was quite busy during this year’s ISCA, so I did not have the chance to read the papers or watch the videos carefully. I will try to read the ISCA 2021 papers that I am interested in, hopefully before MICRO 2021 starts.

Published:

In my view, it is basically a spatial dataflow architecture with more general compatibility.

Published:

Most DNN accelerator papers I read focus on DNN inference rather than training. From this paper, I learned that the bottlenecks for DNN training are I/O for fetching data and CPU-side preprocessing.

Published:

This paper presents a CPU-FPGA heterogeneous platform for GCN training. The CPU handles the communication-intensive operations and leaves the computation-intensive parts to the FPGA.

It is challenging to accelerate Graph Convolutional Networks because of (1) substantial and irregular data communication to propagate information within the graph, (2) intensive computation to propagate information along the neural network layers, and (3) degree imbalance of graph nodes, which can significantly degrade the performance of feature propagation.

Published:

Task: We map each node in a network into a low-dimensional space.

Goal: Encode nodes so that similarity in the embedding space (e.g., dot product) approximates similarity in the original network.
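To make the goal concrete, here is a minimal sketch with a toy three-node graph. The graph, the hand-picked 2-D embedding values, and the helper names (`dot`, `embedding_similarity`) are all hypothetical and only for illustration; a real method would learn the embeddings. It shows the dot product scoring a connected pair higher than an unconnected pair.

```python
# Hypothetical toy data: the edge list and the embedding values are made up.
edges = {("a", "b"), ("b", "c")}  # "a" and "c" are not directly connected

# Hand-picked 2-D embeddings (assumption: a real method would learn these).
embeddings = {
    "a": [1.0, 0.0],
    "b": [0.8, 0.6],
    "c": [0.0, 1.0],
}


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(u, v))


def embedding_similarity(u, v):
    """Similarity of two nodes in the embedding space."""
    return dot(embeddings[u], embeddings[v])


# Connected pairs should score higher than the unconnected pair ("a", "c").
for u, v in [("a", "b"), ("b", "c"), ("a", "c")]:
    connected = (u, v) in edges or (v, u) in edges
    print(u, v, "connected" if connected else "not connected",
          round(embedding_similarity(u, v), 2))
```

In this toy setting, "similarity in the original network" is simply whether two nodes share an edge; other definitions (for example, co-occurrence on random walks) would change how the embeddings are chosen but not the dot-product comparison.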

Published:

Network motifs: recurring, significant patterns of interconnections
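As a small illustration of the "recurring" part, the sketch below counts how often one simple pattern, the triangle, appears in an undirected toy graph. The function name `count_triangles` and the edge list are hypothetical. Judging whether a pattern is "significant" would additionally require comparing this count against randomized graphs, which is not shown here.

```python
from itertools import combinations


def count_triangles(edges):
    """Count triangles (3 mutually connected nodes) in an undirected graph."""
    # Build an adjacency map: node -> set of neighbours.
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)

    count = 0
    # Check every unordered triple of nodes once (fine for small toy graphs).
    for a, b, c in combinations(sorted(adj), 3):
        if b in adj[a] and c in adj[a] and c in adj[b]:
            count += 1
    return count


# Hypothetical toy graph: one triangle (1, 2, 3) plus a dangling edge (3, 4).
toy_edges = [(1, 2), (2, 3), (1, 3), (3, 4)]
triangle_count = count_triangles(toy_edges)
print(triangle_count)
```

Checking every triple is cubic in the number of nodes, so this is only meant for small examples.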

Published:

I would like to learn about GNNs to see whether there is any opportunity to optimize or accelerate them. But the GNN survey paper has too many expressions and notations that are hard to understand, so I will watch the CS224W Machine Learning with Graphs lectures to learn the material more smoothly. I will write down some key points I learn.

Published:

This is a paper about reducing the GPU memory footprint using selective recomputation during the LSTM training process. Although I do not know much about LSTM networks, this paper is quite clear and easy to understand.

Published:

This is an ASPLOS 2020 paper that uses processor-in-memory (PIM) for NN acceleration. I know little about PIM techniques, and I don’t know much about SRAM structure. I learned some basic knowledge of SRAM and PIM from this paper, and I learned a lot about implementation tools and benchmark measurement tools.

Published:

This paper is an industry-track paper in ASPLOS 2020. I used to read more academic papers than industry papers, so I lack knowledge of what the industry focuses on. This paper describes Centaur Technology’s Ncore, the industry’s first high-performance DL coprocessor technology integrated into an x86 SoC with server-class CPUs.

Published:

This paper proposes an accelerator for Data Transformation (DT) in servers in ASPLOS 2020.

Published:

Today I am not going to read a specific paper. Rather, I watched a talk about some innovative ideas and opportunities for computer architecture. The video of the talk can be found here

Published:

This ASPLOS 2020 paper studies multiplayer Virtual Reality (VR) on mobile devices.

Published:

This is another best paper in ASPLOS 2020. “I think it’s the dawn of a new era in space exploration, I think a very exciting era,” said Elon Musk. So I am impressed by edge computing in space.

Published:

This is one of the best papers in ASPLOS 2020. I like the idea of transforming a somewhat unfamiliar problem into an equivalent well-solved problem.
